Public Member Functions

| def | __init__ (self, states_df, score_type, max_num_mtries, num_starts=10, ess=1.0, verbose=False, vtx_to_states=None) |

| def | restart (self, mtry_num) |

| def | cache_this (self, move, score_change) |

| def | empty_cache (self) |

Public Member Functions inherited from learning.HillClimbingLner.HillClimbingLner

| def | __init__ (self, states_df, score_type, max_num_mtries, ess=1.0, verbose=False, vtx_to_states=None) |

| def | climb (self) |

| def | do_move (self, move, score_change, do_finish=True) |

| def | refresh_nx_graph (self) |

| def | would_create_cycle (self, move) |

| def | move_approved (self, move) |

| def | finish_do_move (self, move) |

| def | restart (self, mtry_num) |

| def | cache_this (self, move, score_change) |

| def | empty_cache (self) |

Public Member Functions inherited from learning.NetStrucLner.NetStrucLner

| def | __init__ (self, is_quantum, states_df, vtx_to_states=None) |

| def | fill_bnet_with_parents (self, vtx_to_parents) |

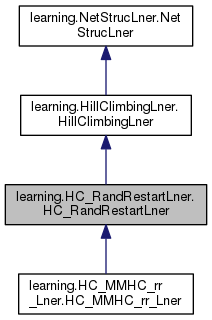

HC_RandRestartLner (Hill Climbing Random Restart Learner) adds to its parent class, HillClimbingLner, a restart() function for random restarts. Once a local maximum is reached, a random move (a 'restart') is made, and from that new starting point the system is again driven toward a possibly different local maximum. The number of random starts can be specified. At the end, the local maximum with the highest score is selected.
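The search strategy above can be sketched on a toy one-dimensional problem. This is a minimal illustration of random-restart hill climbing under simplifying assumptions, not the class's actual code: the integer search space and the helper names `hill_climb` and `random_restart_search` are hypothetical, and a restart here jumps to a random point instead of applying a random move taken from the last try's cache.

```python
import random
from typing import Callable, Optional


def hill_climb(x: int, score: Callable[[int], float], lo: int, hi: int) -> int:
    """Greedy ascent on the integers lo..hi: step to the better neighbour
    until no neighbour improves the score (a local maximum)."""
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        if not neighbours:
            return x  # single-point search space
        best = max(neighbours, key=score)
        if score(best) <= score(x):
            return x  # no improving move left: local maximum
        x = best


def random_restart_search(score: Callable[[int], float], lo: int, hi: int,
                          num_starts: int = 10, seed: Optional[int] = 0) -> int:
    """Climb from num_starts random starting points and keep the best local max."""
    rng = random.Random(seed)
    best_x = hill_climb(rng.randint(lo, hi), score, lo, hi)  # first start
    for _ in range(num_starts - 1):                          # num_starts - 1 restarts
        # Restart: jump to a random point, accepted regardless of its score.
        x = hill_climb(rng.randint(lo, hi), score, lo, hi)
        if score(x) > score(best_x):
            best_x = x  # best local maximum found so far
    return best_x


# Usage on a toy landscape with local maxima at indices 1, 4 and 7.
values = [0, 3, 1, 0, 5, 2, 0, 8, 1, 0]
best = random_restart_search(lambda i: values[i], 0, len(values) - 1)
print(best, values[best])
```

A single climb stalls in whichever basin it starts in; keeping only the best local maximum across starts is what makes the extra starts pay off.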

References

----------

1. Nicholas Cullen, neuroBN, on GitHub

Attributes

----------

is_quantum : bool

True for quantum bnets and False for classical bnets

bnet : BayesNet

a BayesNet in which we store what is learned

states_df : pandas.DataFrame

a Pandas DataFrame with training data, in which each column is a node and each row is a sample. Each row (sample) gives the state of every column (node).

ord_nodes : list[DirectedNode]

a list of DirectedNode objects, named and ordered the same as the column labels of self.states_df.

max_num_mtries : int

maximum number of move tries

nx_graph : networkx.DiGraph

a networkx directed graph used to store arrows

score_type : str

score type, either 'LL', 'BIC', 'AIC', 'BDEU' or 'K2'

scorer : NetStrucScorer

object of NetStrucScorer class that keeps a running record of scores

verbose : bool

True if a running commentary should be printed to the console

vertices : list[str]

list of vertices (node names). Same as states_df.columns

vtx_to_parents : dict[str, list[str]]

dictionary mapping each vertex to a list of its parents' names
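Structure learning has to keep the graph acyclic, and the inherited member list includes would_create_cycle(). The following is a dependency-free sketch, assuming a move that adds the arrow beg -> end: such an arrow closes a directed cycle exactly when end can already reach beg. The helper names `children_map` and `arrow_would_create_cycle` are hypothetical, and the class itself stores arrows in a networkx.DiGraph rather than the plain dictionaries used here.

```python
from typing import Dict, List, Set


def children_map(vtx_to_parents: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Invert vtx_to_parents into vertex -> set of children (arrows parent -> child)."""
    kids: Dict[str, Set[str]] = {v: set() for v in vtx_to_parents}
    for child, parents in vtx_to_parents.items():
        for pa in parents:
            kids.setdefault(pa, set()).add(child)
    return kids


def arrow_would_create_cycle(vtx_to_parents: Dict[str, List[str]],
                             beg: str, end: str) -> bool:
    """True if adding beg -> end would close a directed cycle, i.e. if end
    can already reach beg by following arrows (or if beg == end)."""
    kids = children_map(vtx_to_parents)
    stack, seen = [end], set()
    while stack:  # depth-first search starting from end
        v = stack.pop()
        if v == beg:
            return True
        if v not in seen:
            seen.add(v)
            stack.extend(kids.get(v, ()))
    return False


dag = {"a": [], "b": ["a"], "c": ["b"]}        # arrows a -> b -> c
print(arrow_would_create_cycle(dag, "c", "a"))  # True
print(arrow_would_create_cycle(dag, "a", "c"))  # False
```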

mcache : list[tuple[str, str, str]]

a list that stores all moves of a try. This list is cleared at the beginning of each try.

score_ch_cache : list[tuple[float, float, float]]

a list that stores the score changes of each move of a try. Each score change is given as a 3-tuple of floats, (beg_score_ch, end_score_ch, tot_score_ch).
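The two caches can be pictured as index-aligned lists, with entry i of score_ch_cache describing move i of mcache. This sketch assumes that layout; the `MoveCache` class is hypothetical, and reading the three strings of a move as (begin vertex, end vertex, action) is an assumption, since their meaning is not specified here.

```python
from typing import List, Tuple

Move = Tuple[str, str, str]           # assumed layout, e.g. (beg_vtx, end_vtx, action)
ScoreCh = Tuple[float, float, float]  # (beg_score_ch, end_score_ch, tot_score_ch)


class MoveCache:
    """Hypothetical stand-in for the mcache / score_ch_cache pair."""

    def __init__(self) -> None:
        self.mcache: List[Move] = []
        self.score_ch_cache: List[ScoreCh] = []

    def cache_this(self, move: Move, score_change: ScoreCh) -> None:
        # Append to both lists so that index i always refers to the same move.
        self.mcache.append(move)
        self.score_ch_cache.append(score_change)

    def empty_cache(self) -> None:
        # Called at the beginning of each try.
        self.mcache.clear()
        self.score_ch_cache.clear()


cache = MoveCache()
cache.cache_this(("a", "b", "add"), (0.5, -0.1, 0.4))  # hypothetical move and scores
print(cache.mcache, cache.score_ch_cache)
```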

best_start_graph : networkx.DiGraph

best_start_score : float

Each time restart() is called, the current total score is compared with best_start_score, and if it is higher, the current nx_graph and its score are stored in best_start_graph and best_start_score, respectively.

cur_start : int

current start. Increases by one every time restart() is called.

num_starts : int

number of starts, which is one more than the number of times restart() is called (the initial start is not a restart).

| def learning.HC_RandRestartLner.HC_RandRestartLner.restart (self, mtry_num) |

This function takes in mtry_num and returns (restart_approved, 0). The 0 is intended to be the next mtry_num, so the move-try count is reset to zero. The function also increases cur_start by one. All restarts are approved as long as their number does not exceed num_starts.
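The return value and the approval rule can be sketched as follows. This is a hypothetical sketch, assuming cur_start is 1 during the initial climb, so that exactly num_starts - 1 restarts are approved; the class name `RestartCounter` is illustrative only.

```python
from typing import Tuple


class RestartCounter:
    """Hypothetical sketch of the counting logic described for restart()."""

    def __init__(self, num_starts: int = 10) -> None:
        self.num_starts = num_starts
        self.cur_start = 1  # assumption: the initial climb is start number 1

    def restart(self, mtry_num: int) -> Tuple[bool, int]:
        # mtry_num (move tries used so far) is not needed for this counting logic.
        self.cur_start += 1
        restart_approved = self.cur_start <= self.num_starts
        # The 0 is the next mtry_num: move tries are counted afresh after a restart.
        return restart_approved, 0


counter = RestartCounter(num_starts=3)
print([counter.restart(mtry_num=5) for _ in range(3)])
# [(True, 0), (True, 0), (False, 0)]
```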

The current total score is compared with best_start_score, and if it is higher, the current nx_graph and its score are stored as the best so far.
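The best-so-far bookkeeping might look like this. The sketch stores a copy of the graph so that later moves on the live graph cannot alter the stored best; it uses a plain dict of parents as a stand-in for nx_graph (for a networkx.DiGraph, `nx_graph.copy()` would play the role of `copy.deepcopy`). `BestStartTracker` and `record` are hypothetical names.

```python
import copy
from typing import Any, Optional


class BestStartTracker:
    """Hypothetical sketch: remember the highest-scoring graph seen at restarts."""

    def __init__(self) -> None:
        self.best_start_graph: Optional[Any] = None
        self.best_start_score = float("-inf")

    def record(self, graph: Any, tot_score: float) -> None:
        if tot_score > self.best_start_score:
            # Store a copy: later moves on the live graph must not change the best.
            self.best_start_graph = copy.deepcopy(graph)
            self.best_start_score = tot_score


tracker = BestStartTracker()
live = {"a": [], "b": ["a"]}  # stand-in for nx_graph: vertex -> parents
tracker.record(live, -12.5)
live["b"].remove("a")         # a later move edits the live graph...
tracker.record(live, -20.0)   # ...and scores worse, so it is not stored
print(tracker.best_start_score, tracker.best_start_graph)
# -12.5 {'a': [], 'b': ['a']}
```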

The function selects a move uniformly at random from mcache (mcache is a list of all non-optimal moves of the last try, and score_ch_cache is a list of the score changes of those non-optimal moves). This random move is submitted to do_move(), so it is performed regardless of its non-optimality. The hope is that this random restart will carry the system from the attractor basin of the current local maximum to the attractor basin of another local maximum.
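The random move itself reduces to a uniform choice of one index into the two aligned caches. In this sketch, `do_move` is passed in as a plain callable standing in for the class's do_move(); the function name `random_restart_move` and returning None when the cache is empty are assumptions, not documented behavior.

```python
import random
from typing import Callable, List, Optional, Tuple

Move = Tuple[str, str, str]
ScoreCh = Tuple[float, float, float]


def random_restart_move(mcache: List[Move], score_ch_cache: List[ScoreCh],
                        do_move: Callable[[Move, ScoreCh], None],
                        rng: Optional[random.Random] = None) -> Optional[Move]:
    """Choose one cached move uniformly at random and apply it unconditionally."""
    if not mcache:
        return None  # assumption: with nothing cached, no restart move is made
    if rng is None:
        rng = random.Random()
    i = rng.randrange(len(mcache))         # every index equally likely
    do_move(mcache[i], score_ch_cache[i])  # applied regardless of its score change
    return mcache[i]


applied = []
moves = [("a", "b", "add"), ("b", "c", "del")]  # hypothetical move tuples
changes = [(0.1, -0.3, -0.2), (-0.4, 0.0, -0.4)]
chosen = random_restart_move(moves, changes,
                             lambda m, s: applied.append((m, s)), random.Random(1))
print(chosen, applied)
```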

Parameters

----------

mtry_num : int

the current move-try number

Returns

-------

bool, int

restart_approved, and the next mtry_num, which is always 0

Public member functions, public attributes, and static public member functions are also inherited from learning.HillClimbingLner.HillClimbingLner and learning.NetStrucLner.NetStrucLner.

1.8.11
