|

Quantum Fog

0.9.3

|


Public Member Functions | |

| def | __init__ (self, states_df, alpha, verbose=False, vtx_to_states=None, learn_later=False) |

| def | learn_struc (self) |

| def | find_MB (self, vtx=None) |

| def | find_nbors (self) |

| def | orient_edges (self) |

| def | new_filled_nx_graph (self) |

| def | undo_cycles (self) |

| def | orient_undecided_edges (self) |

Public Member Functions inherited from learning.NetStrucLner.NetStrucLner | |

| def | __init__ (self, is_quantum, states_df, vtx_to_states=None) |

| def | fill_bnet_with_parents (self, vtx_to_parents) |

Static Public Member Functions | |

| def | MB_lner_test (LnerClass, verbose=False) |

Static Public Member Functions inherited from learning.NetStrucLner.NetStrucLner | |

| def | learn_nd_state_names (bnet, states_df) |

| def | import_nd_state_names (bnet, vtx_to_states) |

| def | int_sts_detector (sub_states_df) |

Public Attributes | |

| alpha | |

| verbose | |

| vtx_to_MB | |

| vtx_to_parents | |

| vtx_to_nbors | |

Public Attributes inherited from learning.NetStrucLner.NetStrucLner | |

| is_quantum | |

| bnet | |

| states_df | |

| ord_nodes | |

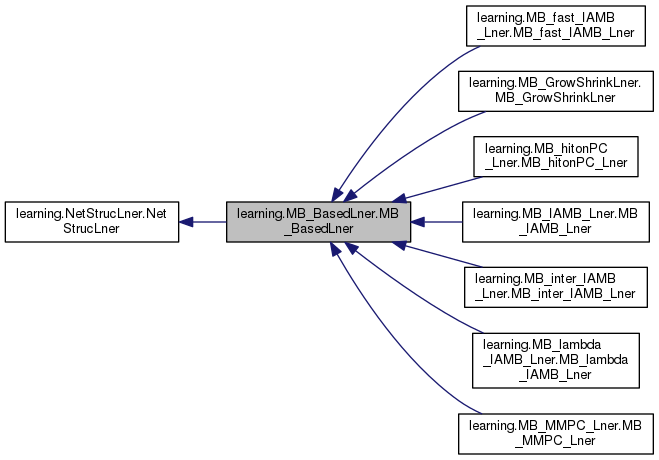

MB_BasedLner (Markov Blanket Based Learner) is an abstract class for

learning the structure of a bnet by first finding the Markov blanket of

each node, then using that MB info to find the neighbors of each node,

then orienting the edges of each node with its neighbors. The procedure

for orienting the edges is not 100% effective and must be patched up

(it might introduce some cycles and leave some edges undecided). A

heuristic is introduced to patch things up.

The first MB based learner was Grow Shrink (referring to growing and

shrinking of the MB) by Margaritis. See Refs. 1 and 2 for his original

paper and his 2003 Thesis at Carnegie Mellon.

Many variations of Grow Shrink were introduced after it. In Quantum Fog,

Grow Shrink and all its variants are subclasses of this class,

MB_BasedLner, and their names all start with 'MB_' for easy

identification and so they all stay together in an alphabetical listing

of files.

Ref. 3, the PhD thesis of Shunkai Fu, was very helpful in writing the

MB_ classes, because it contains pseudo code for most of the MB_

algorithms. However, note that in that pseudo code, whenever it says I <

epsilon, it means that the conditional mutual info I > epsilon.

See Shunkai Fu's thesis (Ref. 3) if you want to know who invented each

MB_ algorithm and in which papers they first proposed it. The

References given below are not necessarily the first papers, but rather

papers with good pseudo code.

References

----------

1. D. Margaritis and S. Thrun, Bayesian Network Induction via Local

Neighborhoods Adv. in Neural Info. Proc. Sys. 12 (MIT Press, 2000)

2. D. Margaritis, Learning Bayesian Network Model Structure from Data,

Thesis 2003 (Carnegie Mellon Univ.)

3. Shunkai Fu, Efficient Learning of Markov Blanket and Markov Blanket

Classifier, Thesis 2010, UNIVERSITÉ DE MONTRÉAL

4. Jean-Philippe Pellet, André Elisseeff, Using Markov Blankets for

Causal Structure Learning (Journal of Machine Learning Research 9, 2008)

5. Nicholas Cullen, NeuroBN at Github

Attributes

----------

is_quantum : bool

True for quantum bnets and False for classical bnets

bnet : BayesNet

a BayesNet in which we store what is learned

states_df : pandas.DataFrame

a Pandas DataFrame with training data. column = node and row =

sample. Each row/sample gives the state of the col/node.

ord_nodes : list[DirectedNode]

a list of DirectedNode's named and in the same order as the column

labels of self.states_df.

alpha : float

threshold used for deciding whether a conditional or unconditional

mutual info is said to be close to zero (independence) or not (

dependence). The error in a data entropy is on the order of ln(n+1)

- ln(n) \approx 1/n where n is the number of samples so 5/n is a

good default value for alpha.

verbose : bool

If True, prints a running commentary to the console

vtx_to_MB : dict[str, list[str]]

A dictionary mapping each vertex to a list of the vertices in its

Markov Blanket. (The MB of a node consists of its parents, children

and children's parents, aka spouses).

vtx_to_nbors : dict[str, list[str]]

a dictionary mapping each vertex to a list of its neighbors. The

literature also calls the set of neighbors of a vertex its PC (

parents-children) set.

vtx_to_parents : dict[str, list[str]]

a dictionary mapping each vertex to a list of its parents' names

| def learning.MB_BasedLner.MB_BasedLner.__init__ | ( | self, states_df, alpha, verbose = False, vtx_to_states = None, learn_later = False | ) |

Constructor

Parameters

----------

states_df : pandas.DataFrame

alpha : float

verbose : bool

vtx_to_states : dict[str, list[str]]

A dictionary mapping each node name to a list of its state names.

This information will be stored in self.bnet. If

vtx_to_states=None, constructor will learn vtx_to_states

from states_df

learn_later : bool

False if you want to call the function learn_struc() inside the

constructor. True if not.

Returns

-------

None
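The alpha attribute above motivates 5/n as a default from the estimate ln(n+1) - ln(n) \approx 1/n. The following is a minimal sketch of that arithmetic, together with a plug-in mutual information estimate compared against alpha. The toy data, the column names and the helper mutual_info are hypothetical and are not part of Quantum Fog; the estimator the library uses internally may differ.

```python
import numpy as np
import pandas as pd


def mutual_info(df, x, y):
    """Plug-in estimate (in nats) of the mutual info of columns x and y."""
    joint = pd.crosstab(df[x], df[y], normalize=True).to_numpy()
    px = joint.sum(axis=1, keepdims=True)   # marginal of x, shape (kx, 1)
    py = joint.sum(axis=0, keepdims=True)   # marginal of y, shape (1, ky)
    mask = joint > 0                        # skip empty cells: 0*log(0) = 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px @ py)[mask])))


# Toy training data: column = node, row = sample (hypothetical node names).
rng = np.random.default_rng(0)
n = 2000
rain = rng.integers(0, 2, size=n)
wet = np.where(rng.random(n) < 0.9, rain, 1 - rain)   # agrees with rain 90% of the time
coin = rng.integers(0, 2, size=n)                      # generated independently of rain
states_df = pd.DataFrame({"Rain": rain, "WetGrass": wet, "Coin": coin})

# ln(n+1) - ln(n) is close to 1/n, which motivates alpha = 5/n.
entropy_err = np.log(n + 1) - np.log(n)
alpha = 5 / n

# A mutual info above alpha is read as dependence, below alpha as independence.
dependent = mutual_info(states_df, "Rain", "WetGrass") > alpha
```

A states_df and alpha built this way have the shapes the constructor expects (a pandas.DataFrame and a float); which MB_ subclass to pass them to is left open here.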

| def learning.MB_BasedLner.MB_BasedLner.find_MB | ( | self, vtx = None | ) |

This function finds the MB of vtx and stores it inside vtx_to_MB[vtx].

If vtx=None, then it will find the MB of all the vertices of the graph.

This function is overridden by all the subclasses of this class (the

ones with names starting with MB_). All the other functions called by

learn_struc() are the same for most of the subclasses of this class.

Parameters

----------

vtx : str

Returns

-------

bool
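To make the target of find_MB concrete, here is a small sketch that reads the Markov Blanket (parents, children and children's parents) off a DAG that is already known. This is not how the MB_ subclasses work, since they must infer the MB from data; the helper markov_blanket and the wetgrass edge list below are assumptions made for illustration.

```python
import networkx as nx


def markov_blanket(dag, vtx):
    """Return the sorted Markov Blanket of vtx in a known DAG."""
    parents = set(dag.predecessors(vtx))
    children = set(dag.successors(vtx))
    # Spouses are the other parents of vtx's children.
    spouses = {pa for ch in children for pa in dag.predecessors(ch)}
    return sorted((parents | children | spouses) - {vtx})


# Classical wetgrass structure (edge list assumed for illustration).
dag = nx.DiGraph([
    ("Cloudy", "Sprinkler"),
    ("Cloudy", "Rain"),
    ("Sprinkler", "WetGrass"),
    ("Rain", "WetGrass"),
])

# Same shape as the vtx_to_MB attribute: dict[str, list[str]].
vtx_to_MB = {vtx: markov_blanket(dag, vtx) for vtx in dag.nodes}
```

In this graph Rain belongs to the MB of Sprinkler only as a spouse: the two share the child WetGrass but are not neighbors.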

| def learning.MB_BasedLner.MB_BasedLner.find_nbors | ( | self | ) |

Finds, for each vtx of the graph, a list of all its neighbors and puts

that info into vtx_to_nbors.

Returns

-------

None

| def learning.MB_BasedLner.MB_BasedLner.learn_struc | ( | self | ) |

This is the orchestra conductor of the symphony. Each of the functions

it calls does a lot. By the end, a whole bnet structure has been learned

from the data and has been stored in self.bnet.

Returns

-------

None

| def learning.MB_BasedLner.MB_BasedLner.MB_lner_test | ( | LnerClass, verbose = False | ) | static |

This static method gives a simple example that we use to test

MB_BasedLner and its subclasses (those starting with MB_). The

method takes as input training data generated from 2 graphs (the

classical versions of wetgrass and earthquake) and it outputs a

drawing of the learned structure.

Parameters

----------

LnerClass : MB_BasedLner or subclass

This is either MB_BasedLner without quotes or the name of a

subclass of that class.

Returns

-------

None

| def learning.MB_BasedLner.MB_BasedLner.new_filled_nx_graph | ( | self | ) |

This function fills nx_graph with the info found in vtx_to_parents.

Returns

-------

networkx.DiGraph
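As an illustration of the mapping involved, the sketch below builds a networkx.DiGraph from a dictionary shaped like vtx_to_parents, adding one arrow parent -> vertex per entry. The function name nx_graph_from_parents and the sample dictionary are hypothetical; the actual method body in Quantum Fog may differ.

```python
import networkx as nx


def nx_graph_from_parents(vtx_to_parents):
    """Build a DiGraph with an arrow parent -> vtx for every listed parent."""
    nx_graph = nx.DiGraph()
    # Add all vertices first so that isolated vertices are kept too.
    nx_graph.add_nodes_from(vtx_to_parents)
    for vtx, parents in vtx_to_parents.items():
        nx_graph.add_edges_from((pa, vtx) for pa in parents)
    return nx_graph


# Hypothetical learned parents, shaped like dict[str, list[str]].
example_parents = {
    "Cloudy": [],
    "Sprinkler": ["Cloudy"],
    "Rain": ["Cloudy"],
    "WetGrass": ["Sprinkler", "Rain"],
}
example_graph = nx_graph_from_parents(example_parents)
```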

| def learning.MB_BasedLner.MB_BasedLner.orient_edges | ( | self | ) |

This function gives an orientation to some (not necessarily all) of the

undirected edges implied by vtx_to_nbors. The edge orientation info

found by this function is stored by it in vtx_to_parents.

Returns

-------

None

| def learning.MB_BasedLner.MB_BasedLner.orient_undecided_edges | ( | self | ) |

When this function is called in learn_struc(), the vtx_to_parents that

has been learned so far may not include all of the edges implied by

vtx_to_nbors. Hence, there might still be some undirected edges. This

function uses a reasonable but not rigorous heuristic to orient those

undecided edges.

Returns

-------

None

| def learning.MB_BasedLner.MB_BasedLner.undo_cycles | ( | self | ) |

When this function is called in learn_struc(), the vtx_to_parents that

has been learned so far may imply (directed) cycles. This function uses

a reasonable but not rigorous heuristic to reverse the direction of at

least one arrow in each cycle and make it a non-cycle.

Returns

-------

None
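The heuristic that undo_cycles actually uses is not spelled out on this page. As one possible illustration of the general idea (reverse an arrow in each directed cycle until none remain), here is a sketch built on networkx. The function break_cycles, its max_iter guard and the choice of which arrow to reverse are all assumptions, not the library's method.

```python
import networkx as nx


def break_cycles(vtx_to_parents, max_iter=1000):
    """Reverse one arrow per detected cycle until the graph is acyclic."""
    graph = nx.DiGraph()
    graph.add_nodes_from(vtx_to_parents)
    for vtx, parents in vtx_to_parents.items():
        graph.add_edges_from((pa, vtx) for pa in parents)
    for _ in range(max_iter):
        try:
            cycle = nx.find_cycle(graph)
        except nx.NetworkXNoCycle:
            # No cycle left: rebuild the parents dictionary and stop.
            return {v: sorted(graph.predecessors(v)) for v in graph.nodes}
        # Arbitrary choice: reverse the last arrow of the cycle found.
        tail, head = cycle[-1][:2]
        graph.remove_edge(tail, head)
        graph.add_edge(head, tail)
    # Reversing an arrow can create a new cycle, so guard against looping.
    raise RuntimeError("cycles remain after max_iter reversals")


# Hypothetical parents implying the cycle a -> b -> c -> a.
cyclic_parents = {"a": ["c"], "b": ["a"], "c": ["b"]}
acyclic_parents = break_cycles(cyclic_parents)
```

Because a reversal can in principle introduce a new cycle, the sketch caps the number of iterations instead of assuming termination.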

1.8.11
